Building a Better Claude Workflow

MCP Servers, Sub-Agents, and the Joy of Custom Commands

Imagine telling Claude to start working on a feature before you log off for the night — and coming back the next morning to a polished pull request, tested, reviewed, and ready for human eyes. This post explores how I built that kind of workflow using MCP servers, sub-agents, and custom Claude commands to orchestrate the entire development process.

Escaping the Overstuffed Prompt

Working with LLMs daily, I’ve noticed how easily they get overwhelmed as prompts and context grow. Past a certain size, models start to blur the boundaries between instructions and lose track of sequencing. You can see it happen. First it skips a subtask. Then it merges two instructions. Then it forgets the output format entirely.

It’s not that LLMs can’t reason about complex instructions; they just don’t handle sprawling, multitask prompts well. Each additional step increases the chance of confusion or omission. Model providers acknowledge this, and their guidance points the same way: Anthropic’s prompt engineering docs, for example, recommend chaining complex tasks into smaller, focused prompts.

So I stopped treating Claude like a single, all-knowing agent and started thinking of it as a system of smaller, focused agents that work together.
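To make that shift concrete, here is a minimal sketch of a pipeline of focused agents. It assumes each agent can be modeled as a function that receives only its own short instruction plus the previous step's output. `Agent`, `run_pipeline`, and the stub handlers are hypothetical stand-ins for real model calls, not part of any Claude API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """One focused agent: a short instruction plus a handler.

    The handler is a hypothetical stand-in for a model call; it receives only
    this agent's instruction and the previous step's output, never one giant prompt.
    """
    name: str
    instruction: str
    handler: Callable[[str, str], str]


def run_pipeline(agents: list[Agent], task: str) -> dict[str, str]:
    """Run agents in a fixed order, passing each one the previous agent's output."""
    outputs: dict[str, str] = {}
    context = task
    for agent in agents:
        context = agent.handler(agent.instruction, context)
        outputs[agent.name] = context
    return outputs


# Stub handlers so the sketch runs without any model access.
planner = Agent("planner", "Write a numbered plan.", lambda instr, ctx: f"plan for: {ctx}")
coder = Agent("coder", "Implement the plan.", lambda instr, ctx: f"code from {ctx}")

results = run_pipeline([planner, coder], "add dark mode")
print(results["coder"])
```

The ordering lives in code rather than in one long prompt, so no single model call has to juggle every step at once.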

MCP Servers: Giving Claude Access to the Outside World

MCP (Model Context Protocol) servers are how Claude reaches beyond the model itself. They expose tools for interacting with external systems: web search and browsing, project trackers like Linear or Jira, databases, internal APIs, and even browser automation.
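Under the hood, MCP is built on JSON-RPC 2.0. After an `initialize` handshake, a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`, over stdio or HTTP. The sketch below only builds those request messages; the tool name `search_issues` and its arguments are hypothetical, since each server defines its own tools.

```python
import json
from itertools import count
from typing import Any, Optional

_request_ids = count(1)  # JSON-RPC ids must be unique within a session


def mcp_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape an MCP client sends."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it offers...
list_tools = mcp_request("tools/list")
# ...then invoke one by name. "search_issues" and its arguments are hypothetical.
call_tool = mcp_request(
    "tools/call",
    {"name": "search_issues", "arguments": {"query": "login bug", "limit": 5}},
)
print(call_tool)
```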

In my workflow, I use a variety of them, including Playwright, Chrome DevTools, and Vercel MCP servers:

Playwright runs automated end-to-end tests to verify user flows.

Chrome DevTools allows Claude to open my locally running app and validate that UI changes work in a live browser.

Vercel handles CI/CD and deployments, giving Claude the ability to check build status, trigger redeploys, and confirm the latest version is live.

Together, these tools make Claude capable of doing real, production-level work. I currently have MCP servers configured for web access, Linear and Jira integrations, databases, Playwright, Chrome DevTools, and Vercel.
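For reference, here is a minimal sketch of a project-scoped `.mcp.json` for three of the servers above, generated from Python so it can be scripted or checked in CI. The package names (`@playwright/mcp`, `chrome-devtools-mcp`), the Vercel endpoint URL, and the exact file schema are assumptions based on those projects' public docs at the time of writing; verify them before relying on this.

```python
import json
from pathlib import Path

# Assumed schema: Claude Code reads project-scoped servers from .mcp.json under "mcpServers".
MCP_CONFIG = {
    "mcpServers": {
        # Local stdio servers launched via npx (package names assumed; check each README).
        "playwright": {"command": "npx", "args": ["@playwright/mcp@latest"]},
        "chrome-devtools": {"command": "npx", "args": ["chrome-devtools-mcp@latest"]},
        # Remote server over HTTP (URL assumed; check Vercel's MCP docs).
        "vercel": {"type": "http", "url": "https://mcp.vercel.com"},
    }
}


def write_mcp_config(directory: Path) -> Path:
    """Write the config as .mcp.json in the given project directory and return its path."""
    path = directory / ".mcp.json"
    path.write_text(json.dumps(MCP_CONFIG, indent=2) + "\n")
    return path


# e.g. write_mcp_config(Path.cwd())
```

Linear, Jira, and database servers would be added as further entries in the same `mcpServers` map.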

Why This Matters: With the right MCP setup, Claude stops being a chatbot and starts acting like a junior developer on your team.

A Custom Command for Managing the Whole Development Flow

From Ticket to Pull Request

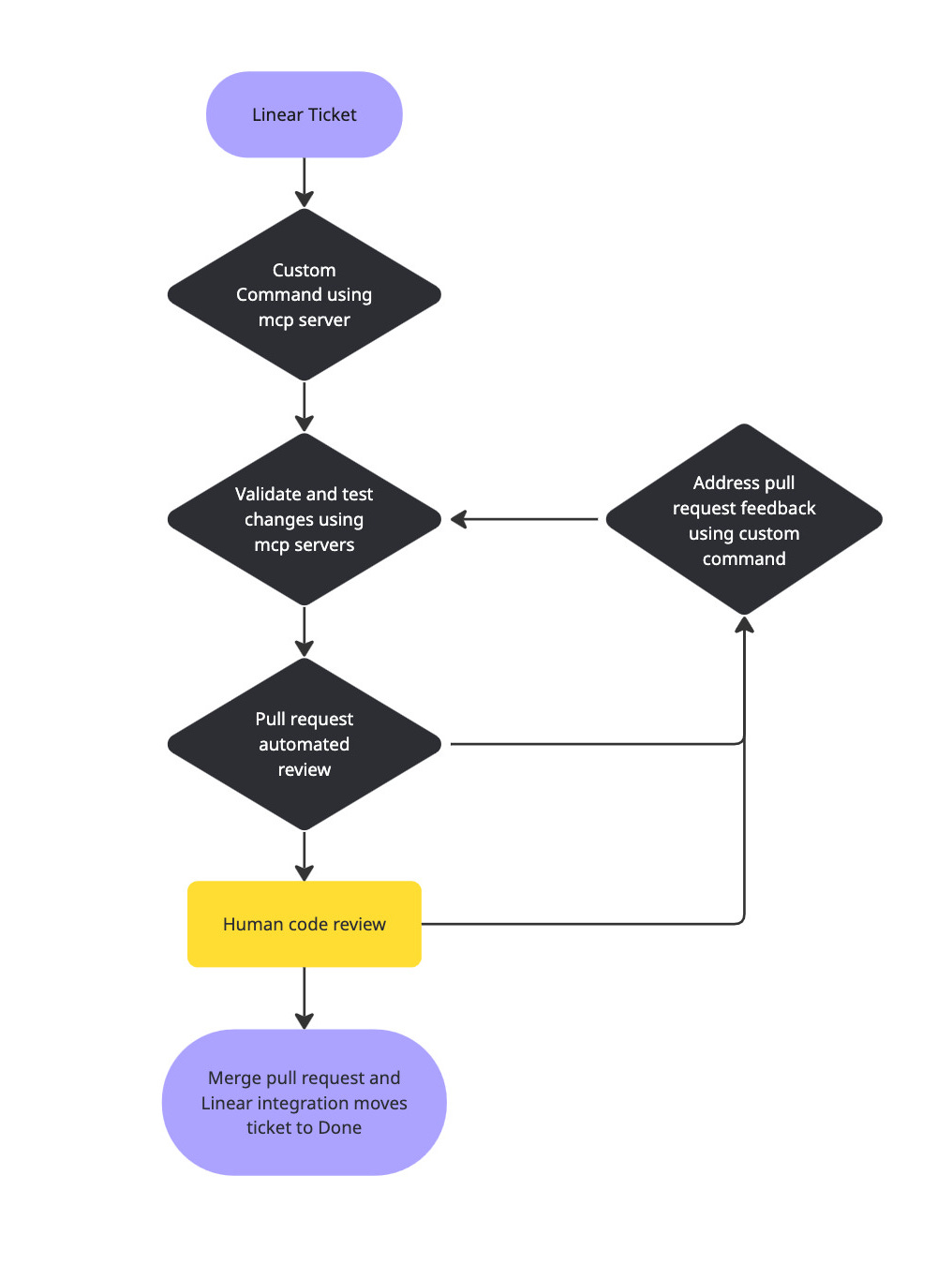

One of my favorite parts of this setup is a custom Claude command I built called /ticket. It automates almost the entire development flow — from picking up a Linear ticket to opening a pull request on GitHub.

Claude uses the Linear MCP server to find the ticket, assign it to me, and move it to “In Progress.” It then creates a new branch with the GitHub CLI, plans the implementation, and calls my ui-designer sub-agent if the ticket involves UI work. Once the code is written, it runs the tests and linter, then orchestrates sub-agents for code review, security analysis, and deployment checks.
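The /ticket flow is essentially a fixed sequence of steps with one conditional branch and a set of gating checks. Here is a minimal sketch of that orchestration, assuming each step can be modeled as a plain function. `Ticket`, `branch_name`, `run_ticket`, and the branch-naming scheme are hypothetical stubs standing in for the Linear MCP server, the GitHub CLI, and the sub-agents; this is not the actual command.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Ticket:
    id: str
    title: str
    touches_ui: bool = False
    status: str = "Todo"
    log: list[str] = field(default_factory=list)


def branch_name(ticket: Ticket) -> str:
    """Hypothetical naming scheme: '<ticket-id>-<slugified-title>'."""
    slug = re.sub(r"[^a-z0-9]+", "-", ticket.title.lower()).strip("-")
    return f"{ticket.id.lower()}-{slug}"


def run_ticket(ticket: Ticket, checks: dict[str, Callable[[], bool]]) -> bool:
    """Walk a ticket through the flow; stop before opening a PR if any check fails."""
    ticket.status = "In Progress"                        # stands in for the Linear MCP update
    ticket.log.append(f"branch:{branch_name(ticket)}")   # stands in for branch creation
    ticket.log.append("plan")
    if ticket.touches_ui:
        ticket.log.append("ui-designer")                 # delegate only when UI work is needed
    ticket.log.append("implement")
    for name, check in checks.items():                   # tests, lint, review sub-agents, ...
        if not check():
            ticket.log.append(f"failed:{name}")
            return False
        ticket.log.append(f"passed:{name}")
    ticket.log.append("open-pr")
    return True


ticket = Ticket("ENG-42", "Add dark mode toggle", touches_ui=True)
ok = run_ticket(ticket, {"tests": lambda: True, "lint": lambda: True, "security-review": lambda: True})
print(ok, ticket.log)
```

Keeping the sequence in code means a failed check halts the flow instead of being quietly skipped, which is exactly the failure mode of one overstuffed prompt.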

Automating Code Review and Cleanup

Closing the Loop

After a pull request is created, my AI agents handle the first round of review. Claude reads the comments, checks which ones are accurate, fixes the valid issues, reruns the checks, and updates the branch.

By the time a human reviewer looks at the PR, most of the repetitive fixes are done. Once the PR is merged, a Linear integration automatically moves the ticket to “Done,” closing the loop.
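The review pass can be sketched as a bounded loop: keep only the comments that hold up, apply fixes, rerun checks, and stop once everything is green or an iteration cap is hit. `review_loop` and its callbacks are hypothetical placeholders for what Claude and the sub-agents actually do; the cap is an assumed safeguard so the agent hands off to a human instead of looping forever.

```python
from typing import Callable


def review_loop(
    comments: list[str],
    is_valid: Callable[[str], bool],
    apply_fix: Callable[[str], None],
    run_checks: Callable[[], list[str]],
    max_rounds: int = 3,
) -> bool:
    """Address review feedback until checks pass or max_rounds is reached.

    Returns True when the branch is green and ready to push, False when a
    human should take over.
    """
    pending = [c for c in comments if is_valid(c)]  # ignore comments that don't hold up
    for _ in range(max_rounds):
        for issue in pending:
            apply_fix(issue)
        failures = run_checks()  # names of failing checks; empty list means all green
        if not failures:
            return True
        pending = failures  # next round targets whatever the checks reported
    return False


fixed: list[str] = []
check_runs = iter([["lint"], []])  # first run fails lint, second run passes
ok = review_loop(
    ["rename `tmp` to `draft`", "nit: this is fine"],
    is_valid=lambda c: not c.startswith("nit"),
    apply_fix=fixed.append,
    run_checks=lambda: next(check_runs),
)
print(ok, fixed)
```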

Practical Lessons from Building It

Start small. You can do a lot with two sub-agents and a couple of MCP tools.

Be explicit. Don’t always rely on the model to decide when to use tools; when a step needs a specific tool, say so in the command.

Define strong interfaces. Treat sub-agents and tools like microservices, each with a single job and a clearly defined input and output.

Test locally. Mock tools before hitting real APIs.
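The last two lessons combine naturally: if every tool sits behind a small typed interface, a fake can stand in for the real API during local runs. A minimal sketch, assuming a hypothetical `IssueTracker` protocol; the real Linear or Jira MCP tools expose their own, different operations.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Issue:
    key: str
    status: str


class IssueTracker(Protocol):
    """Hypothetical interface a sub-agent depends on instead of a concrete tool."""
    def get(self, key: str) -> Issue: ...
    def move(self, key: str, status: str) -> Issue: ...


class FakeTracker:
    """In-memory stand-in for local tests; makes no network calls."""

    def __init__(self, issues: dict[str, str]) -> None:
        self._issues = dict(issues)
        self.calls: list[tuple[str, str]] = []  # records every move for assertions

    def get(self, key: str) -> Issue:
        return Issue(key, self._issues[key])

    def move(self, key: str, status: str) -> Issue:
        self.calls.append((key, status))
        self._issues[key] = status
        return Issue(key, status)


def start_work(tracker: IssueTracker, key: str) -> Issue:
    """Code under test: only move tickets that haven't been started yet."""
    issue = tracker.get(key)
    if issue.status == "Todo":
        return tracker.move(key, "In Progress")
    return issue


fake = FakeTracker({"ENG-1": "Todo", "ENG-2": "Done"})
print(start_work(fake, "ENG-1"))  # moves ENG-1 to In Progress
print(start_work(fake, "ENG-2"))  # leaves a finished ticket alone
print(fake.calls)
```

Because `start_work` only knows about the protocol, swapping the fake for a wrapper around the real MCP tool changes nothing in the calling code.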

Future Outlook

AI tools are getting more capable, and they’re changing every day. I’m especially looking forward to experimenting with Claude Skills and seeing how they can improve my workflow further. As AI adoption grows, we as developers need to keep learning and adapting, both to make good use of these tools and to protect ourselves and our code from their drawbacks.

The So What

This shift isn’t just about Claude. It’s about how we build with language models. We’re moving from prompting to programming, from guesswork to architecture.

When you structure your workflow with MCP servers, sub-agents, and custom commands, you’re not just writing prompts anymore. You’re building a platform — and that’s where the real magic begins.